Are you working on a project in AI, data science, or HPC? If so, the odds are good that your computing can be GPU accelerated. You need the right solution in both hardware and software, and an NVIDIA Data Science Workstation is the answer.

You have a special problem. You need a special solution.

AI, data science, and HPC technologies are being applied to some of the world's hardest computing problems. To solve those problems you need fine-tuned hardware, specialized software, and guaranteed, professional support. An NVIDIA Data Science Workstation from Scan Computers fits the bill.

Are you training neural networks? Are you trying to gain actionable insights from your IoT data? Are you modelling at an atomic level? These problems and many others like them require processing large amounts of data and plenty of computing power.

A Data Science Workstation like the 3XS from Scan Computers is tailor-made for solving these problems. It's configured with fast, enterprise-grade hardware and a complete software stack for GPU-accelerated AI and data science, and it is backed by support from the experts at Scan and NVIDIA.

By combining specialised hardware and pre-configured software, a Data Science Workstation will integrate into your project quickly and seamlessly.

Scan Computers' 3XS Data Science Workstation is a specialised solution for data scientists.

I spent a fair amount of time with Scan's 3XS Data Science Workstation, and you can read about the workstation, the testing, and the system performance in a separate article. In this article, I touch on the workstation hardware, but the focus here is on the pre-configured software tools and on applying this system to your computing problems.

Let's get started.

Why do you need a Data Science Workstation?

If you don’t have ongoing projects in AI or data science, you may wonder how other companies are using these technologies. If your own organization has processes in which teams with expert knowledge perform repetitive, time-consuming tasks, you may have a good candidate for AI.

Workstation applications in CAD, design, and special effects address specific users in manufacturing, product development, film, and similar fields. AI and data science, on the other hand, span a broad range of industries. Here are a few examples.

In manufacturing, AI is taking computer vision and quality assurance to new levels. AI systems can be trained to recognize defects in complex manufacturing processes and assemblies, including failures that are difficult to measure. Examples include complicated assemblies, welding quality, electrical components, and semiconductor wafers.

AI is also being used to verify production quality for products with thousands of customer build options, assuring that the correct options are being built for each customer.

Financial institutions are using AI to detect fraudulent transactions. Using large volumes of historical transaction data, they can build systems that flag potentially fraudulent transactions in real time and dramatically reduce fraud.

Telecommunication companies use data science and AI in many areas. Call clarity and customer satisfaction can be improved with AI-based noise reduction.

The Data Science Workstation integrates seamlessly into running projects.

Transmission infrastructure networks can be optimized for coverage and cost. Monitoring and maintenance operations are being improved by tracking real-time weather events and disturbances.

Retail companies face traditional challenges in inventory management, forecasting, and logistics. They apply technology to manage hundreds of thousands of products in tens of thousands of stores. Innovative companies are applying image recognition and AI to create autonomous checkout services that give shoppers a faster, more convenient shopping experience.

Healthcare is a challenging field that is using data science and AI in various ways. Companies are creating field-operable DNA sequencing devices so that pathogens can be discovered more rapidly. Data science techniques are being applied to the development of drugs and vaccines. And AI tools and data analysis are instrumental in delivering improved patient diagnostics to doctors at the point of care.

Pre-configured and tested for AI and Data Science

The Scan 3XS Data Science Workstation comes pre-configured with a thoroughly tested, GPU-accelerated software stack tailored to data science and AI.

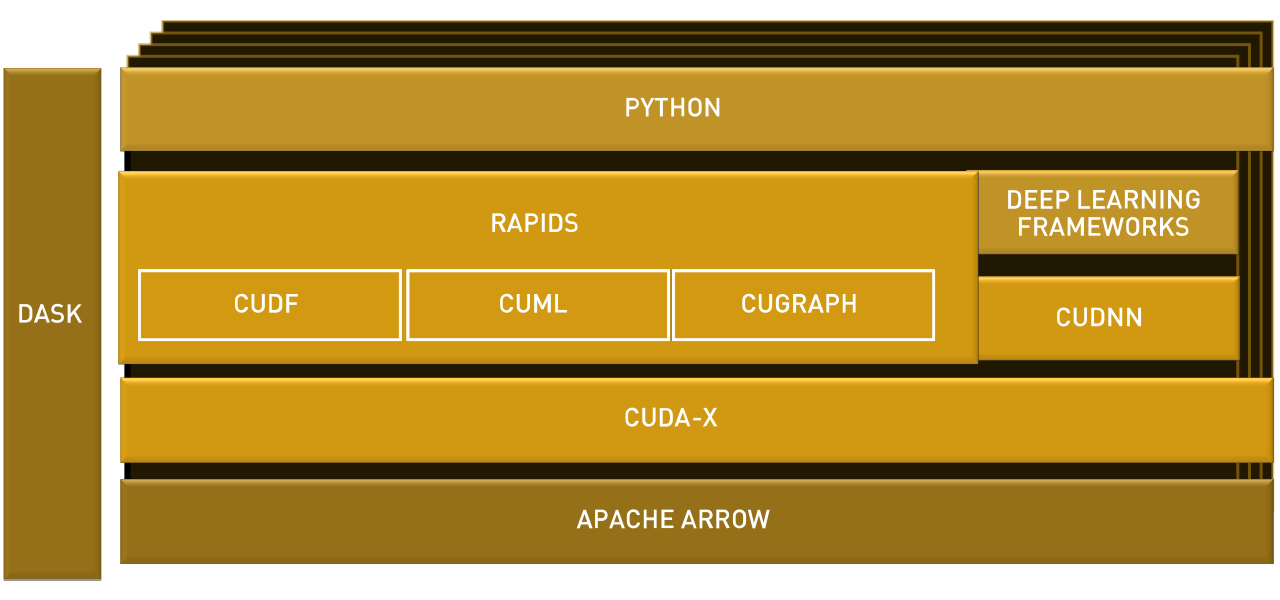

The core of the GPU acceleration for AI and data science comes from RAPIDS. A properly configured AI and data science workstation needs RAPIDS, Dask, CUDA-X, Python, multiple deep learning frameworks, and GPU-optimized libraries. NVIDIA Docker containerization, which simplifies development and deployment with GPU acceleration, is also included in the Scan Data Science Workstation software stack.

The benefit of Scan doing all of this configuration work is simple. I pushed the 3XS power button, and in fewer than 15 minutes I was logged into the NVIDIA GPU Cloud (NGC) and had downloaded my first dataset. Compare that to the hours, if not days, needed to properly configure Python, Docker, CUDA, RAPIDS, and the required frameworks.

Given the completeness of the pre-installed software stack, it is very likely that you will be running existing projects in a similarly short timeframe. This makes adding the performance that the Scan system offers into running projects very simple, if not seamless.

The 3XS Data Science Workstation software stack. Source: NVIDIA

The software stack for the data science workstation is comprehensive. Data scientists can access tools and interfaces like Python with which they are already familiar. Many deep learning frameworks are included. The core software accelerating data science and AI lies in RAPIDS, an open-source collection of GPU-accelerated libraries and APIs for data science and artificial intelligence.

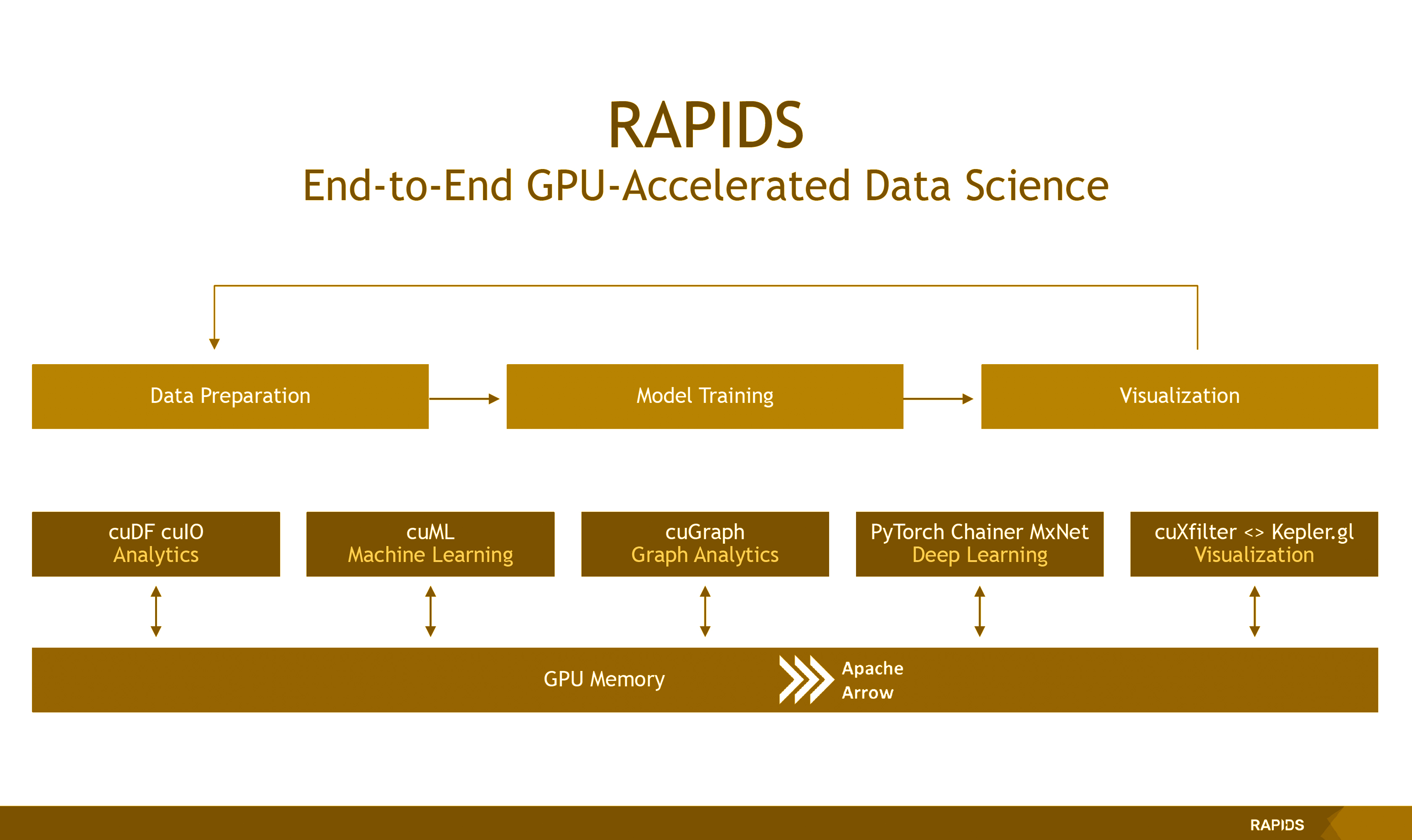

RAPIDS lies at the heart of the software stack

As mentioned, RAPIDS provides the GPU acceleration for data science. RAPIDS focuses on GPU acceleration for the entire AI and data science workflow, end to end. Importantly, it interfaces to Python above it and uses the CUDA libraries below it. This combination delivers the familiar Python environment on top of the GPU-optimized CUDA platform.

The libraries in RAPIDS deliver GPU acceleration for key functionality. cuDF is a pandas-like data frame manipulation library. cuML is a machine learning library containing GPU-accelerated algorithms like those available in scikit-learn.

The cuGraph library is an accelerated graph analytics library similar to NetworkX.

RAPIDS integrates with Apache Arrow and supports columnar, in-memory data structures. This integration means that data from RAPIDS can be used seamlessly by many deep learning frameworks, such as Chainer, PyTorch, TensorFlow, and MXNet, with DLPack serving as a common format for exchanging tensors in memory.
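To make "columnar, in-memory data structures" concrete, here is a toy sketch in plain Python. It is not Apache Arrow's actual format, which adds validity bitmaps, offset buffers, and memory alignment; it only shows the core idea that each column lives in one contiguous, typed buffer, so a library can scan or hand off a single column without touching the others. The helper `to_columnar` and the sample records are hypothetical.

```python
from array import array


def to_columnar(rows, schema):
    """Convert row-oriented records into one contiguous typed buffer per column.

    rows   -- list of dicts, one per record (row-oriented layout)
    schema -- dict mapping column name to an array typecode: 'q' (int64) or 'd' (float64)
    """
    # One typed buffer per column, so the values of a column sit next to each other in memory.
    columns = {name: array(code) for name, code in schema.items()}
    for row in rows:
        for name in schema:
            columns[name].append(row[name])
    return columns


rows = [
    {"store_id": 1, "sales": 120.5},
    {"store_id": 2, "sales": 98.0},
    {"store_id": 3, "sales": 143.25},
]
cols = to_columnar(rows, {"store_id": "q", "sales": "d"})

# A column-wise aggregate reads only the 'sales' buffer.
total_sales = sum(cols["sales"])
print(total_sales)  # 361.75
```

Because each column exposes a plain contiguous buffer (here via Python's buffer protocol, e.g. `memoryview(cols["sales"])`), another library can read it without copying, which is the property that Arrow-style formats are designed to preserve.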

Because RAPIDS works well with Python, it also works well with many data science visualization libraries. The RAPIDS developers continue to work on closer integration with visualization libraries. When these libraries leverage the native GPU in-memory formats, they can achieve high-performance rendering even with large data sets.

RAPIDS adds the GPU acceleration to the software stack and Dask adds the scaling. Dask scales the software stack to multi-GPU and multi-threaded CPU environments. Dask is able to scale Python, RAPIDS, and a whole host of libraries.

This software stack is complete.

- It includes programming languages preferred for AI and data science.

- It contains deep learning frameworks.

- It includes a GPU accelerated core.

- It scales from single workstations to data center clusters.

Accelerate. Scale. Deploy.

RAPIDS provides GPU accelerated libraries and Dask provides scaling from one workstation to a data center filled with GPU accelerated servers. NVIDIA Docker provides GPU accelerated portability. This combination is critical for data science workloads.

Dask scales Python workloads from a single notebook computer to data center clusters. Matthew Rocklin is its original author. Dask schedules individual Python tasks, distributing them across the available computing resources, which can range from the multiple cores of a single CPU to 1,000 nodes in a data center supercomputer.
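Dask represents a computation as a task graph. In its low-level graph specification, a plain dict maps each key either to a value or to a task tuple whose first element is a callable and whose remaining elements are arguments, which may name other keys. The sketch below is a toy, sequential evaluator for graphs in that style, using only the standard library. It is not Dask's scheduler, which additionally runs independent tasks in parallel on threads, processes, or cluster workers; `toy_get` is a hypothetical name.

```python
from operator import add, mul


def toy_get(graph, key, cache=None):
    """Evaluate `key` in a Dask-style task graph, sequentially (toy version)."""
    if cache is None:
        cache = {}
    if key in cache:  # each key is computed at most once
        return cache[key]
    task = graph[key]
    if isinstance(task, tuple) and task and callable(task[0]):
        func, *args = task
        # Arguments that name other keys are dependencies: evaluate them first.
        resolved = [
            toy_get(graph, arg, cache) if isinstance(arg, str) and arg in graph else arg
            for arg in args
        ]
        result = func(*resolved)
    else:
        result = task  # a literal value, not a task
    cache[key] = result
    return result


def inc(x):
    return x + 1


graph = {
    "x": 1,
    "a": (inc, "x"),           # a = x + 1
    "b": (mul, "x", 10),       # b = x * 10; does not depend on "a"
    "total": (add, "a", "b"),  # total = a + b
}
print(toy_get(graph, "total"))  # 12
```

Because `a` and `b` do not depend on each other, a parallel scheduler is free to run them at the same time; that freedom is what Dask exploits when it spreads a graph across cores or nodes. With Dask installed, Dask's custom-graph documentation shows the same kind of dict being passed to `dask.threaded.get(graph, "total")`.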

Dask is an open-source project. As part of the open-source community, the project coordinates with other relevant projects such as NumPy, pandas, and scikit-learn.

Without Dask, data scientists often discovered that their initial Python work would not scale. Dask integrates easily into Python, so data scientists do not need to rewrite their code using other tools and programming languages when they want to scale up a project.

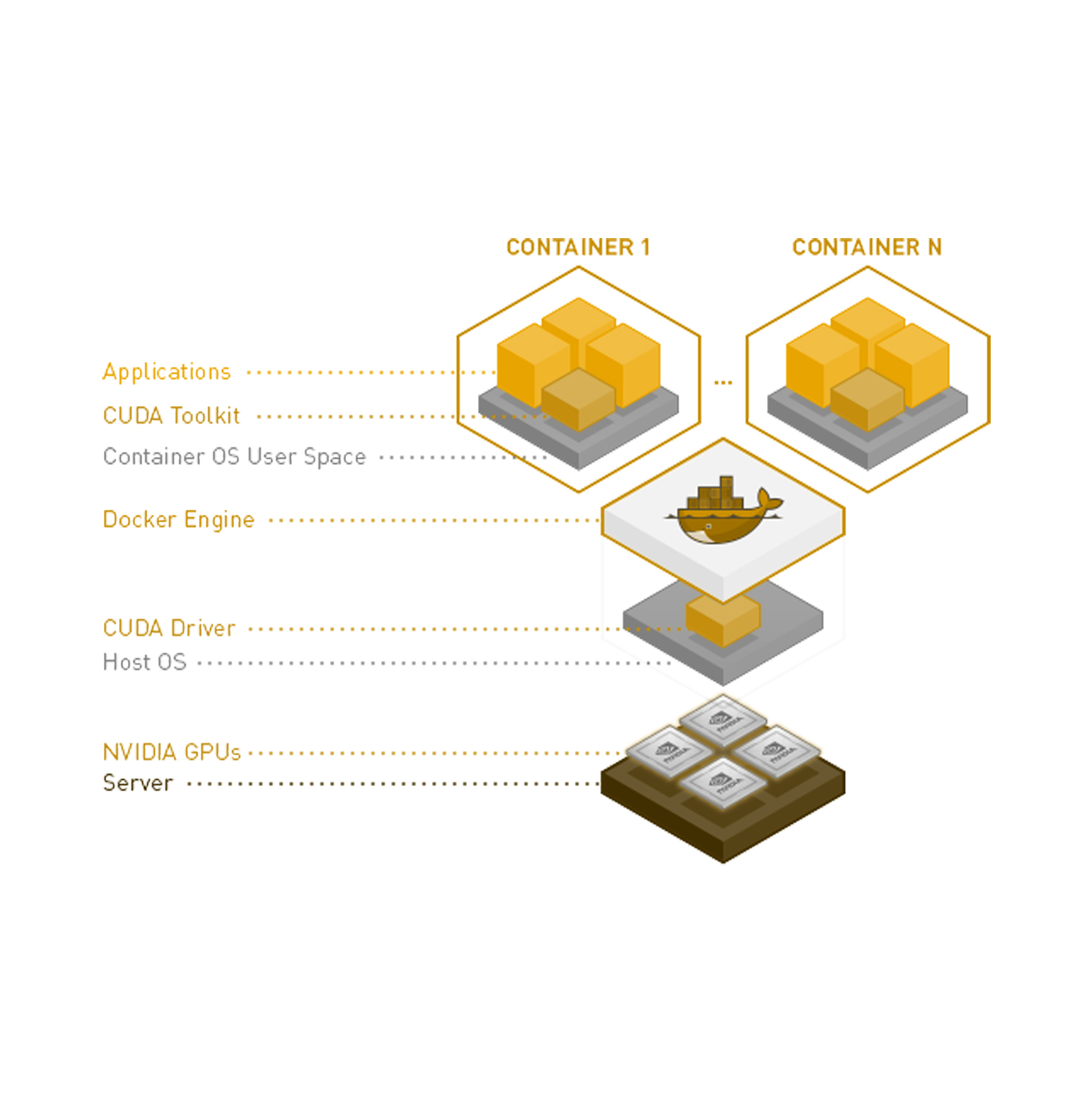

Containerisation is used across the IT industry. It facilitates the development and deployment of any application across an infrastructure of heterogeneous computing systems. Docker is the most widely used container platform.

Being hardware agnostic becomes a problem, however, when data scientists want to leverage specific hardware acceleration such as GPUs. Early workarounds broke easily, so NVIDIA added two enhancements to integrate GPU acceleration into portable Docker containers.

The result is a wrapper around the Docker container that initializes the system correctly at run time. This enables GPU computing in the Docker container.

Together, GPU acceleration with RAPIDS, scaling with Dask, portability with NVIDIA Docker, the popular Python programming language, and a host of machine learning frameworks create a fully functional and attractive software stack for AI and data science.

How fast is it?

The Scan Computers 3XS Data Science Workstation is loaded with just the right hardware. I took an in-depth look at its performance in the article A Data Science Workstation with Experts Pre-installed.

I'd like to discuss performance in the context of scaling AI applications, in particular the results of the Big LSTM training test.

Docker containers: NVIDIA Docker

A problem every company faces when developing and running projects on a Data Science Workstation is deploying those projects on other workstations or on AI and HPC servers. Docker containers can help because they deliver portability. That portability is possible, in part, because Docker containers are hardware agnostic, which becomes a problem for AI and data science projects that require GPU acceleration.

Therefore, NVIDIA Docker is a critical part of the Data Science Workstation’s software stack. In order to gain the value in development and deployment that Docker containers provide, it is necessary to use GPU accelerated containers.

Making containers run seamlessly with GPU acceleration, however, is not straightforward, and configuring GPU containers is a problem that every customer needs to solve.

NVIDIA uses containers in its own research and development. Since the company has already solved the problem that every client will face, it makes perfect sense for GPU-accelerated Docker containers to be built into the pre-installed software stack.

NVIDIA Docker adds two items to standard Docker containers. It includes driver-independent CUDA images in the container, and it loads the necessary driver components into the container at launch time.

By doing so, NVIDIA Docker enables portable, GPU accelerated containers. With NVIDIA Docker, you can develop on a Data Science Workstation and deploy across a range of GPU accelerated computing resources.
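The two enhancements above are what let a container built once run on any machine with an NVIDIA driver. Current Docker releases (19.03 and later) expose this through a `--gpus` flag backed by the NVIDIA Container Toolkit, the successor to the original nvidia-docker wrapper; the exact tooling on the 3XS may differ. The Python sketch below only assembles and prints such a command; the helper `gpu_container_cmd` and the image name are hypothetical.

```python
import shlex


def gpu_container_cmd(image, command, gpus="all"):
    """Return a `docker run` argument list that requests GPU access for a container.

    Assumes Docker 19.03+ with the NVIDIA Container Toolkit, where `--gpus` asks the
    runtime to expose the host GPUs and driver libraries inside the container.
    """
    return ["docker", "run", "--rm", f"--gpus={gpus}", image, *command]


# Hypothetical image name: substitute a CUDA-enabled image you actually use.
cmd = gpu_container_cmd("my-registry/cuda-app:latest", ["nvidia-smi"])
print(shlex.join(cmd))  # docker run --rm --gpus=all my-registry/cuda-app:latest nvidia-smi
```

On a machine with Docker and the toolkit installed, `subprocess.run(cmd, check=True)` would launch the container, and `nvidia-smi` inside it should list the host GPUs if the driver components were injected correctly. Passing `gpus="device=0"` restricts the container to the first GPU.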

The Quadro RTX 8000 boards each have 48 GB of graphics memory. NVLink allows for 100 GB/s transfers between the GPUs. It also creates a flat memory space so that the GPUs and the CPUs can share and access memory, both GPU memory and system memory.

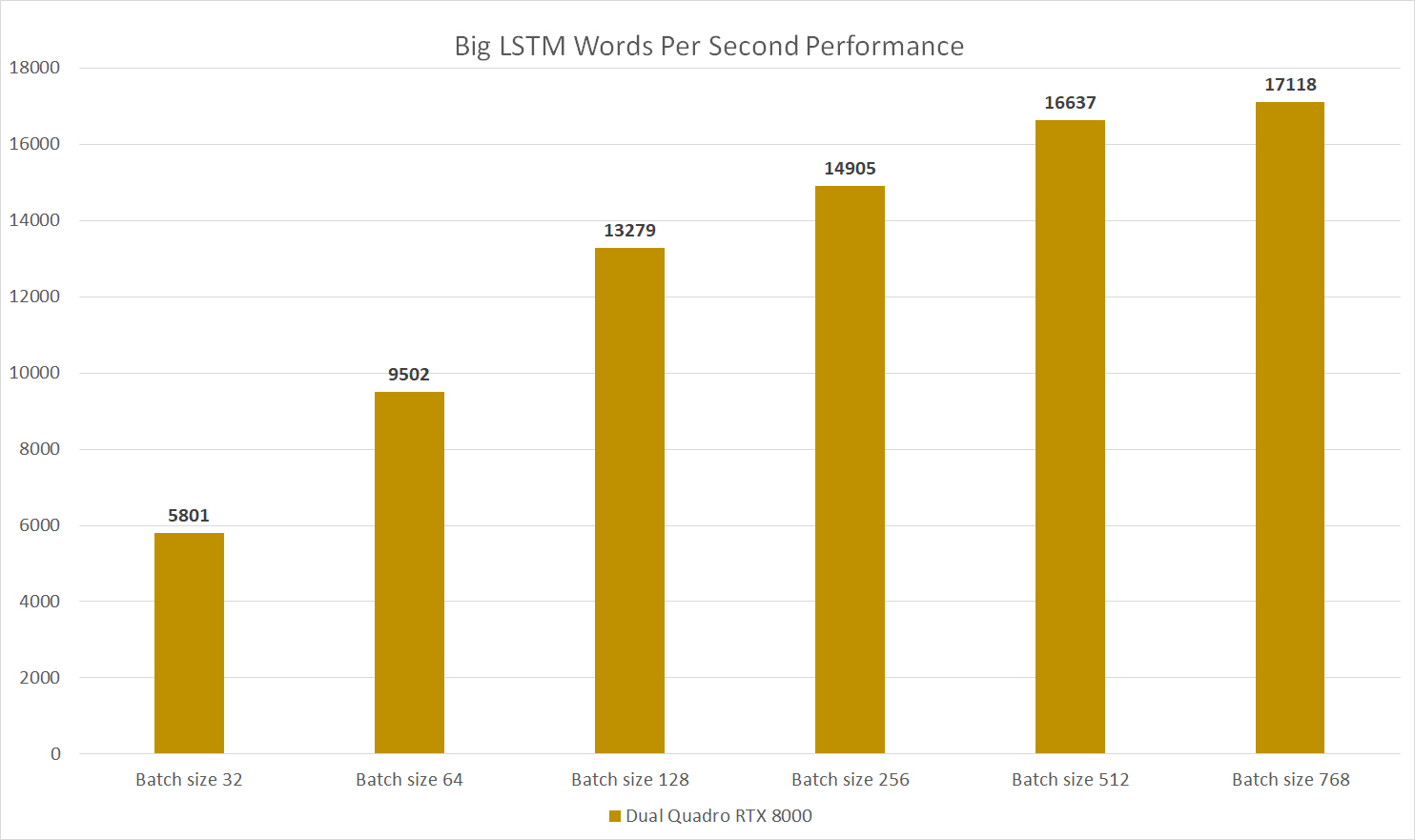

The command to launch Big LSTM contains a batch parameter. This parameter must be set to a smaller value for GPUs with less memory and can be set to higher values for GPUs with more memory. The workload uses all 48 GB of the Quadro RTX 8000's memory, which lets it run with the largest batch size. A GPU with less memory must use a smaller batch size.
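Why batch size tracks GPU memory can be shown with a back-of-envelope estimate: memory that stays fixed (model weights, optimizer state, workspace) is subtracted from what the card offers, and the remainder is divided by the activation memory each sample needs. The sketch below assumes that simple linear model; real frameworks add overheads it ignores, and every number in the usage lines is a hypothetical placeholder, not a Big LSTM measurement.

```python
def max_batch_size(gpu_mem_gb, fixed_gb, per_sample_mb, headroom=0.9):
    """Rough upper bound on the batch size that fits in GPU memory.

    gpu_mem_gb    -- total memory on the card (48 for a Quadro RTX 8000)
    fixed_gb      -- memory that does not grow with batch size (weights, optimizer state, workspace)
    per_sample_mb -- activation memory needed per sample in the batch (assumed, workload-specific)
    headroom      -- fraction of the card's memory we plan to use, leaving slack for fragmentation
    """
    usable_mb = gpu_mem_gb * 1024 * headroom - fixed_gb * 1024
    if usable_mb <= 0:
        return 0  # the fixed costs alone do not fit
    return int(usable_mb // per_sample_mb)


# Hypothetical workload: 8 GB of fixed costs and 64 MB of activations per sample.
print(max_batch_size(48, 8, 64))  # 563
print(max_batch_size(24, 8, 64))  # 217
```

In this made-up case, halving the card's memory cuts the feasible batch by more than half, because the fixed costs do not shrink along with the card.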

I ran the test multiple times with batch sizes from small to large to measure the potential difference in performance.

There is also a parameter that selects the number of GPUs to use for the test. With this I was able to measure the performance difference between a single GPU and dual GPUs.

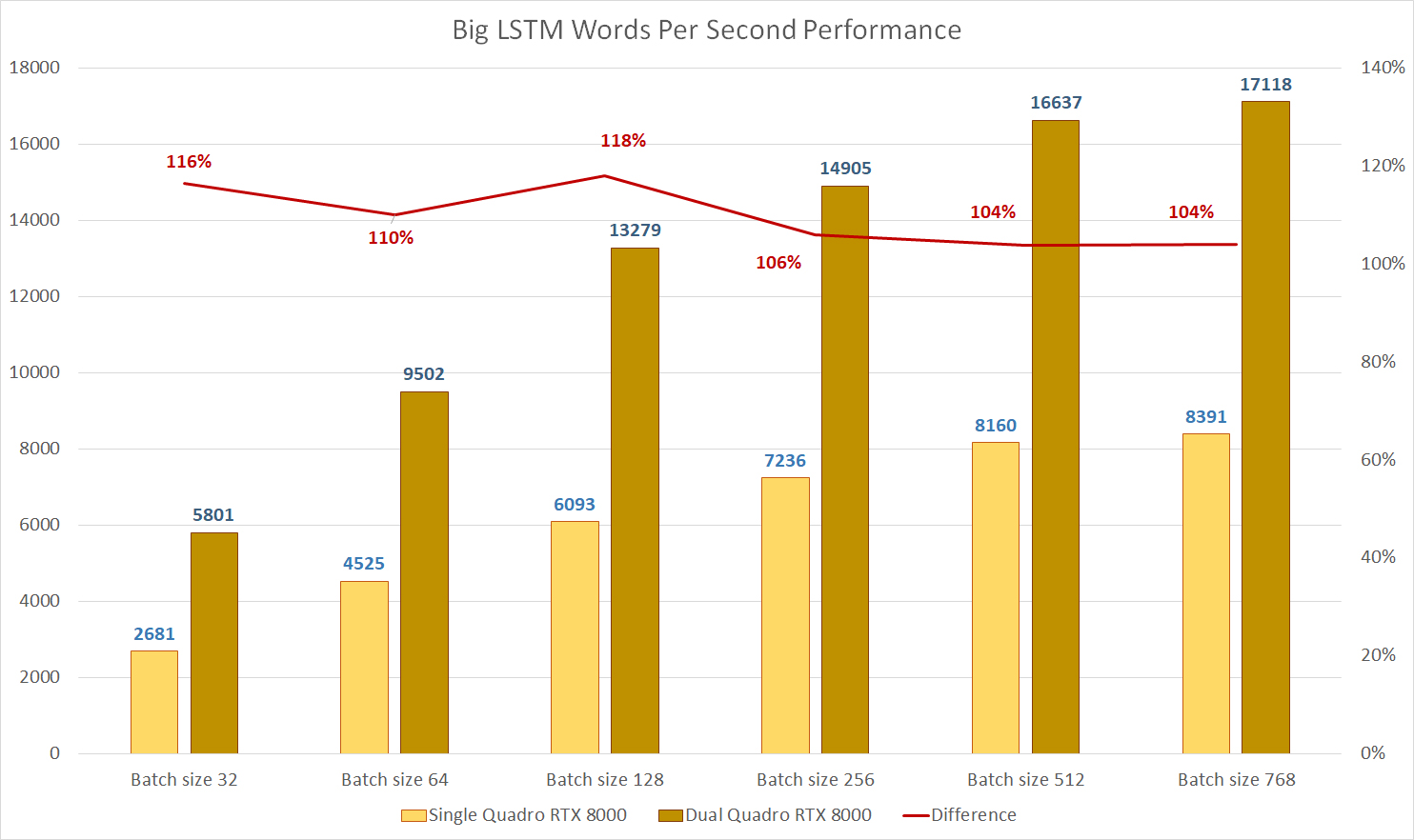

Big LSTM performance with variable batch sizes

This test trains a network for natural language processing. I used different batch sizes for the test to simulate how performance depends on memory size.

The performance with the largest batch size was nearly 3x faster than performance with the smallest batch size.

Big LSTM performance: single vs dual GPU

These results measure the performance difference between a single GPU and two GPUs. I expected performance scaling in the range of 90%.

Performance scaling with dual GPUs remained consistently above 100%. I saw a similar result in the TensorFlow tests.
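The article does not spell out its formula for "performance scaling", so the sketch below assumes one common definition: the throughput added by the second GPU, expressed as a percentage of a single GPU's throughput. Under that assumption, 90% means the pair runs at 1.9x a single GPU, and anything above 100% means the pair is more than twice as fast. The throughput figures in the usage lines are hypothetical, not the measured Big LSTM results.

```python
def speedup(new_throughput, baseline_throughput):
    """Ratio of two throughputs (for example words/sec or samples/sec)."""
    return new_throughput / baseline_throughput


def scaling_percent(single_gpu, dual_gpu):
    """Throughput added by the second GPU, as a percentage of one GPU's throughput.

    100 means the second GPU contributed exactly as much as the first (linear scaling);
    above 100 means the pair is more than twice as fast as a single GPU.
    """
    return (dual_gpu - single_gpu) * 100 / single_gpu


# Hypothetical throughputs, chosen only to illustrate the arithmetic.
print(scaling_percent(10_000, 19_000))  # 90.0
print(scaling_percent(10_000, 21_000))  # 110.0
```

The same `speedup` ratio expresses the batch-size comparison above: largest-batch throughput divided by smallest-batch throughput.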

Expertise at your fingertips

A Scan Data Science Workstation has the right hardware and the right software. And Scan have the expertise you need to make the most of it. Scan have the IT experts to get you up and running fast. And to keep you running at full speed, Scan have in-house data scientists and technical specialists.

Scan work with software partners in AI and data science and offer access to many of the products in their portfolio through their Deep Learning Proof of Concept program.

OmniSci, Robovision, and Kinetica are among the software partners at Scan. OmniSci and Kinetica provide GPU-accelerated analytics for extremely large databases. Whether you need to analyse geospatial data or make sense of a continuous stream of IoT data, one of these companies can help.

Robovision helps you build computer vision applications fast. And the company has products to classify and label your data more effectively. The platform includes tools for continuous improvement of your AI systems with built-in evaluation capabilities.

The Scan Data Science Workstation comes with professional support by a team with years of experience in AI and Data Science.

These are just three examples among Scan's many software partners. A company cannot be an expert in AI and data science if it is not familiar with the applications. Scan know many application areas and many ISVs, and that is part of what makes the company a good partner for you.

Evolving with Your Computing

Scan delivers a turnkey solution. Scan has in-house experts to accompany your projects. And Scan also can support your evolving needs in computing.

When projects scale up and you need GPU servers, then Scan can help. When you want cloud workstations, then Scan can help. When you need a high-performance hosted data center, then Scan can help. When you need specialised applications, then Scan can help.

Why do you need a data science workstation from Scan? Scan have the years of experience, the in-house expertise, and the product lines that you might need as your computing requirements in AI and Data Science grow. As your computing needs grow, Scan grows with you.

A Final Perspective

Data Science, AI technology and HPC projects need special solutions. A good solution is more than fast hardware. It is fast hardware that is balanced across the system. Equally important is having a complete software stack. In-house expertise rounds out the package.

The software stack is critical and the NVIDIA Data Science Workstation from Scan has a complete, accelerated, and tested software stack pre-installed. It offers a familiar programming environment in Python, a GPU accelerated core with RAPIDS and CUDA, and a scalable architecture with Dask.

The hardware is top-shelf, enterprise-grade hardware throughout. And your team will be well-served through Scan's support - support at both the IT level and at the project level.

Your Time Is Valuable

At Professional Workstation, we appreciate your time. And we appreciate when workstation users like you share how you work, when you tell us what you use for hardware, and when you give us your opinion.

Thank You!

To give back to users like you, we'll give away a fast, durable external hard drive to a lucky survey participant. It's optional of course, but we'll add your name to the drawing for each survey that you complete.

Subscribe!

And, if you sign up for our newsletter below, then we'll add your name one more time.
